In an area that still lacks specific AI regulation, YouTube is trying to bring some structure. The company has announced that creators must label videos that use realistic AI-generated content, especially music.

What do the new YouTube AI guidelines include? Creators must flag their content when using AI-generated material, especially if it looks real. There’ll be stricter rules for sensitive topics like elections and health crises to prevent deepfakes.

Disclosure is the creator’s responsibility, but YouTube will also verify compliance. Creators who consistently fail to disclose risk video removal, suspension from ad revenue, and the loss of their accounts.

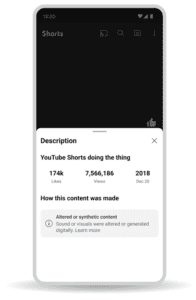

Viewers will see a label on these videos reading “Altered or synthetic content.” The platform is also working on backend verification methods, although the specifics remain unclear.

Imagine you find a deepfake video of yourself on YouTube. You can ask for its removal. However, if it’s satire or parody, or if YouTube decides it doesn’t resemble you closely enough, it might stay up.

The new rules also allow music labels to request the removal of AI-generated music that imitates artists they represent.

For those in the music industry, this change helps prevent others from profiting off artists’ work. Fans who enjoyed AI-generated cover songs, however, stand to lose them.

To respect the fair use exception in copyright law, the company will review a video’s context before deciding whether its use of AI-generated content is allowed. News reports, for instance, may be permissible.

YouTube plans to share further specifics about these guidelines in the coming months before they’re enforced.

Meanwhile, other platforms will likely continue establishing their own rules on AI use.